If you’ve ever taken any applied statistics courses in college, you may have been exposed to the mystique of 30 samples. Too many times I’ve heard statistician do-it-yourselfers tell me that “you need 30 samples for statistical significance.” Maybe that’s what they were taught; maybe that’s how they remember what they were taught. In either case, the statement merits more than a little clarification, starting with the 30-samples part. Suffice it to say that if there were any way to answer the how-many-samples-do-I-need question that simply, you would find it in every textbook on statistics, not to mention TV quiz shows and fortune cookies. Still, if you do an Internet search for “30 samples” you’ll get millions of hits.

Like many legends, there is some truth behind the myth. The 30-sample rule-of-thumb may have originated with William Gosset, a statistician and Head Brewer for Guinness. In a 1908 article published under the pseudonym Student (Student. 1908. Probable error of a correlation coefficient. Biometrika 6(2-3), 302–310), he compared the variation associated with 750 correlation coefficients calculated from sets of 4 and 8 data pairs, and 100 correlation coefficients calculated from sets of 30 data pairs, all drawn from a dataset of 3,000 data pairs. Why did he pick 30 samples? He never said, but he concluded, “with samples of 30 … the mean value [of the correlation coefficient] approaches the real value [of the population] comparatively rapidly” (page 309). That seems to have been enough to get the notion brewing.

Since then, there have been two primary arguments put forward to support the belief that you need 30 samples for a statistical analysis. The first argument is that the t-distribution becomes a close fit for the Normal distribution when the number of samples reaches 30. (The t-distribution, sometimes referred to as Student’s distribution, is also attributable to W. S. Gosset. It is used in place of the Normal distribution when a normally distributed population is represented by only a limited number of samples, so that the population’s standard deviation has to be estimated from the data.) Whether the fit is close enough at 30 samples is a matter of perspective.

This figure shows the difference between the Normal distribution and the t-distribution for 10 to 200 samples. The differences between the distributions are quite large for 10 samples but decrease rapidly as the number of samples increases. The rate of the decrease, however, also diminishes as the number of samples increases. At 30 samples, the difference between the Normal distribution and the t-distribution (measured at the value that cuts off the upper 5% tail) is about 3½%. At 60 samples, the difference is about 1½%. At 120 samples, the difference is less than 1%. So from this perspective, using 30 samples is better than 20 samples but not as good as 40 samples. Clearly, there is no one magic number of samples that you should use based on this argument.
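For readers who want to check those percentages, here is a rough sketch in Python using only the standard library. It rests on a few assumptions that are mine rather than anything stated above: the degrees of freedom are taken as the number of samples minus one, the comparison is made at the one-sided 95th percentile, and the t quantile is found by simple numerical integration and bisection instead of a statistics package. The function names are made up for illustration.

```python
import math
from statistics import NormalDist


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x, df, steps=1000):
    """P(T <= x) for x >= 0, using Simpson's rule on [0, x] plus symmetry."""
    if x == 0:
        return 0.5
    h = x / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3


def t_quantile(p, df, lo=0.0, hi=50.0):
    """Invert t_cdf by bisection; assumes 0.5 <= p < 1."""
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def pct_diff(n, p=0.95):
    """Percent by which the t critical value exceeds the Normal one.

    Assumption: degrees of freedom = n - 1.
    """
    z = NormalDist().inv_cdf(p)
    return 100 * (t_quantile(p, n - 1) / z - 1)


for n in (10, 20, 30, 40, 60, 120, 200):
    print(f"n = {n:3d}: t critical value exceeds z by {pct_diff(n):.2f}%")
```

Under these assumptions the gap shrinks steadily as the number of samples grows, with no visible break at 30. A different choice of tail or degrees of freedom would shift the numbers somewhat but not the shape of the curve.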

The second argument is based on the Law of Large Numbers, which in essence says that the more samples you use the closer your estimates will be to the true population values. This sounds a bit like what Gosset said in 1908, and in fact, the Law of Large Numbers was about 200 years old by that time.

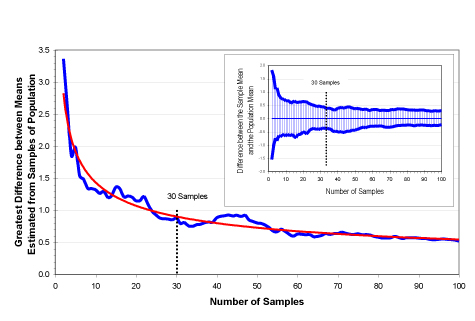

This figure shows how differences between means estimated from different numbers of samples compare to the population mean. (These data were generated by creating a normally distributed population of 10,000 values, then drawing at random 100 sets of values for each number of samples from 2 to 100, i.e., 100 datasets containing 2 samples, 100 datasets containing 3 samples, and so on up to 100 datasets containing 100 samples. Then, the mean of each dataset was calculated. Unlike Gosset, I got to use a computer and some expensive statistical software.) The small inset graph shows the largest and smallest means calculated for datasets of each sample size. The large graph shows the difference between the largest mean and the smallest mean calculated for each sample size. These graphs show that estimates of the mean from a sampled population will become more precise as the sample size increases (i.e., the Law of Large Numbers). The important thing to note is that the precision of the estimated means increases very rapidly up to about ten samples, then continues to increase, albeit at a decreasing rate. Even with more than 70 or 80 samples, the spread of the estimates continues to decrease. So again, there’s nothing extraordinary about using 30 samples.
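The simulation described above can be approximated without expensive software. The sketch below is a loose reconstruction in Python, not the procedure actually used for the figure: the population mean of 0 and standard deviation of 1, the random seed, sampling without replacement, and the function name are all assumptions made for illustration.

```python
import random
import statistics


def spread_of_means(population, sample_size, n_sets, rng):
    """Largest mean minus smallest mean across n_sets random datasets."""
    means = [
        statistics.fmean(rng.sample(population, sample_size))
        for _ in range(n_sets)
    ]
    return max(means) - min(means)


rng = random.Random(42)  # fixed seed (an arbitrary choice) for repeatability

# A normally distributed population of 10,000 values.
# Mean 0 and standard deviation 1 are assumed; the post does not give them.
population = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

# 100 datasets for each sample size from 2 to 100.
spreads = {
    n: spread_of_means(population, n, 100, rng)
    for n in range(2, 101)
}

for n in (2, 5, 10, 30, 50, 100):
    print(f"{n:3d} samples: spread of the 100 means = {spreads[n]:.3f}")
```

Individual runs are noisy, so the spread will not fall perfectly smoothly from one sample size to the next, but the overall pattern should match the figure: a steep drop over the first handful of samples, then a long, slow decline that keeps going well past 30.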

So while Gosset may have inadvertently started the 30-samples tale, you have to give him a lot of credit for doing all those calculations with pencil and paper. To William Gosset, I raise a pint of Guinness.

Now we still have to deal with that how-many-samples-do-I-need question. As it turns out, the number of samples you’ll need for a statistical analysis really all comes down to resolution. Needless to say, that’s a very unsatisfying answer compared to … 30 samples.

one sample, 30 observations.

What is this recent fascination people have with conflating the terms sample and observation?

As Gosset says, “samples of 30,” not “30 samples.”

“Sample” is one of those English words that have several meanings both in and outside of statistics. Sample can be a noun or a verb. As a noun, it can refer to both individual entities from a statistical population and a collection of those entities. Sample is used in the individual sense by many disciplines, especially when referring to a physical portion, like a soil sample or a blood sample.

I would still agree with efrique that in statistics, it helps to reserve the word sample to strictly mean a subset of a population (either a proper population when sampling without replacement or a “super-population” when sampling with replacement).

Really nice post! Is there a function that approximates the red line in the last chart? Perhaps it could be used to make a function that will determine the required sample size, given the desired confidence of the mean?

Love the web page. Long ago in my college days I remember learning this concept. I worked for a while with a commercial real estate appraiser and noticed that at that time they used sample sizes of 3 to 5 “comps”. To me that seemed to allow a great deal of skew and violate this principle of sampling. It was at that time an accepted practice. What is your thought on this?
